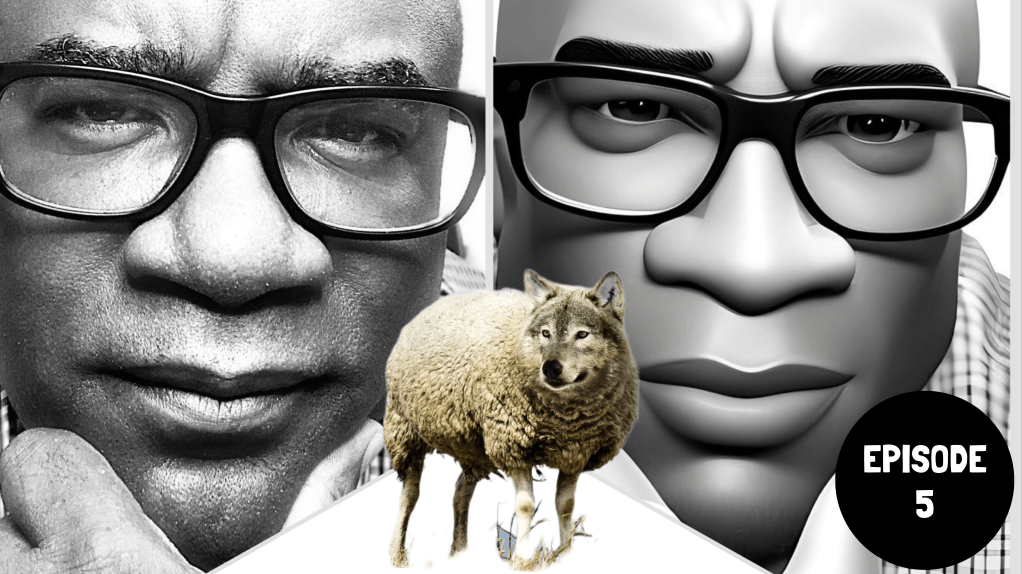

A Wolf in Sheep’s Clothing?

Artificial intelligence (AI) is creeping into every corner of the business world, including the insurance industry, and especially into claims. While it promises many benefits, let’s take a closer look at the ethical implications, effectiveness, and potential dangers. Like a sheep, it appears harmless, but brace yourselves: AI might just be the unexpected wolf crashing the claims party!

If you go by the McKinsey 2030 report, a lot of what we do day-to-day in claims will be automated (Balasubramanian, 2021). However, doing things faster does not mean doing them better. Even the National Law Review cites carriers’ concerns about AI’s use in claims in its May 16, 2023 article, “Insurance Industry Highlights Inconsistent Reliance on AI” (Michael S. Levine, 2023). According to Weply, only 5% of people would prefer to chat with a bot versus a real person (Do you prefer chatbots over humans?, n.d.).

There is a lot of excitement around AI in insurance. But what is often missed is the fact that insurance is an old business. It is a people business: The key word is “People”. One of the primary concerns about AI in claims adjusting is the potential loss of the human experience. Adjusters are trained to deal with the emotional and psychological impacts of a claim, which can be crucial in providing a satisfactory resolution for customers. AI lacks the ability to understand and empathize with customers, which can lead to a lack of trust and dissatisfaction.

Adjusting claims isn’t just about reviewing contracts like a robot reading a shopping list. Adjusters use their judgment and expertise to make decisions, considering policy language, coverage limitations, and legal requirements. It’s a delicate dance that AI may struggle with, like a toddler attempting a moonwalk. There’s a real risk that AI could miss vital details that a human adjuster would catch. And we all know what happens when you miss something important—a lawsuit waiting to pounce!

Another issue is whether customers are aware that their claims are being handled by AI. Transparency is essential in building trust between customers and insurers, and customers have the right to know who or what is handling their claims. While the California Consumer Privacy Act (CCPA) provides regulations for the disclosure of AI usage by businesses, it is not yet a federal requirement.

Lastly, there is a concern that AI could make data more vulnerable to cyberattacks. Because AI systems rely on large amounts of data, any breach in security could lead to a significant loss of sensitive information. Cybersecurity experts are working on solutions to improve AI security, but it remains a concern.

AI can be a handy sidekick in claims adjusting, bringing efficiency and accuracy to the table. But we can’t just let it run wild like a pack of unruly puppies. We need to strike a balance between AI and the human touch, keeping the best of both worlds. And hey, let’s not forget the recent testimony from Sam Altman, the CEO of OpenAI, who practically begged Congress for some ethics in AI usage. Maybe we should gather all our insurance industry friends and toss this matter into a think tank before we go down a path of no return. A message to the industry: Guard your gates!

References:

Balasubramanian, R. (2021, Mar 12). Insurance 2030—The impact of AI on the future of insurance. Retrieved from mckinsey.com: https://www.mckinsey.com/industries/financial-services/our-insights/insurance-2030-the-impact-of-ai-on-the-future-of-insurance?cid=eml-web

Do you prefer chatbots over humans? (n.d.). Retrieved from weply.chat: https://weply.chat/blog/do-you-prefer-chatbots-over-humans

Michael S. Levine, K. V. (2023, May 16). Insurance Industry Highlights Inconsistent Reliance on AI. Retrieved from natlawreview.com: https://www.natlawreview.com/article/insurance-industry-highlights-inconsistent-reliance-ai

2 responses to “AI”

Artificial intelligence is taking over in today’s world, but what happens when it crashes or breaks down? Then we turn to human beings to repair it. We need AI in some cases but not all. AI uses our brains to advance certain tasks, like filling out job applications, and sometimes it can lose data, especially in the business world. I think we should not rely on AI completely; always have a backup plan just in case, and speak with a professional in person.


Excellent points made, Rod. The virus of AI technology has been invading and infesting the world for some time, but it is only now becoming known, exposed, and visible.

It is similar to when the superhighway called the internet became known and Y2K was going to be the end. Man keeps trying to exclude the human heart and relationships from the equation in all these enterprising ideas to gain power, money, and control, but you stated it clearly. AI can’t “discern” or truly “feel”; it can only guess and predict based on the data it collects to drive the process. We who know the truth should be vigilant in our spheres of influence to arrest it and be ready for what comes next. The insurance forest is a major part of this landscape for sure! Fight the good fight my Brother🙏🏾
